Time Series Introduction with R codes

1 What is a Time Series

A set of observed values ordered in time, or we can say, repeated measurement of something usually with the same fixed interval of time (hourly, weekly, monthly).

A collection of observations made sequentially in time[1].

If the variable we are measuring is a count variable, we may have a Poisson Time Series (that is for later).

A time series

Most of the classic statistical theory is based on the assumption of sample randomness and independent observations. On the other hand, time series is just the opposite. Observations are usually dependent on previous values, and their analysis must take into account their temporal order.

For example, a prospective cohort study comparing “injury rate before and after” an implemented program, analyses of time trends, such as Poisson regression and time series analysis, considers the variability that occurs over the study period apart from the change associated with the intervention. They also avoid the loss of information about variability in incidence over time when rates are aggregated into one before and one after rate. The population is its own control.

If previous observations can predict future observations exactly, we have a deterministic process. However, the exact prediction is usually impossible since past values determine only part of the future value. In this case, we say the process is stochastic, and the future values must be seen as a probability conditioned on the past values.

There are many models available to describe the behavior of a particular series. The choice of such a model depends on factors such as the behavior of the phenomenon or the prior knowledge of its nature and the purpose of the analysis.

1.1 Types of Time Series

The R base installation already gives us lots of datasets to work on time-series.

For this article I’ll first load the MASS package that contains some of the dataset we will use.

We can list all datasets available with the function data() from package utils.

library(MASS)

data() # the output is not shown, but you can check in your RStudioMeasured at regular time intervals (discrete), examples:

- Economic: stock market;

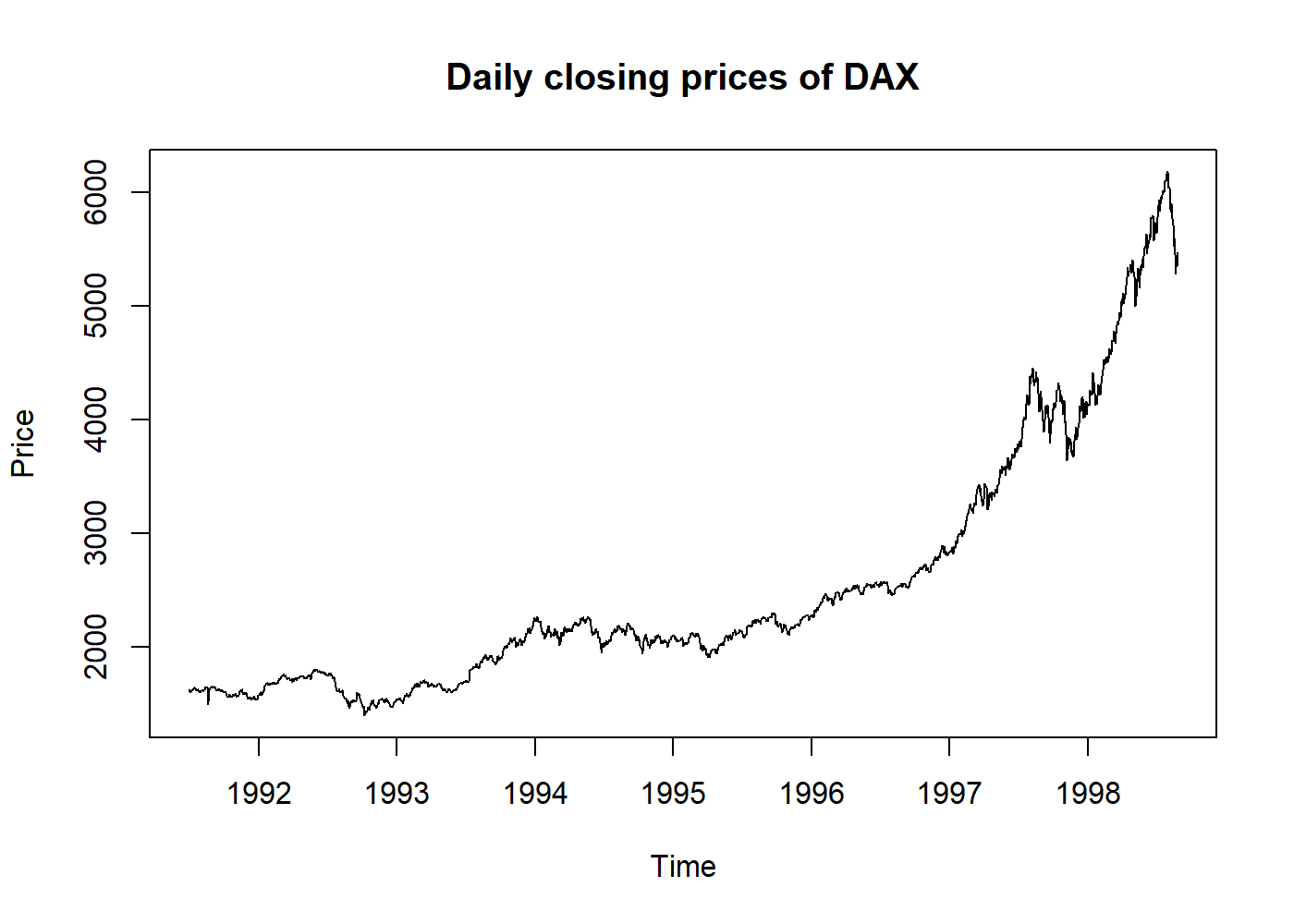

plot(EuStockMarkets[, 1], main = "Daily closing prices of DAX", ylab = "Price")

- Physical/Biological: Pluviometry, DNA;

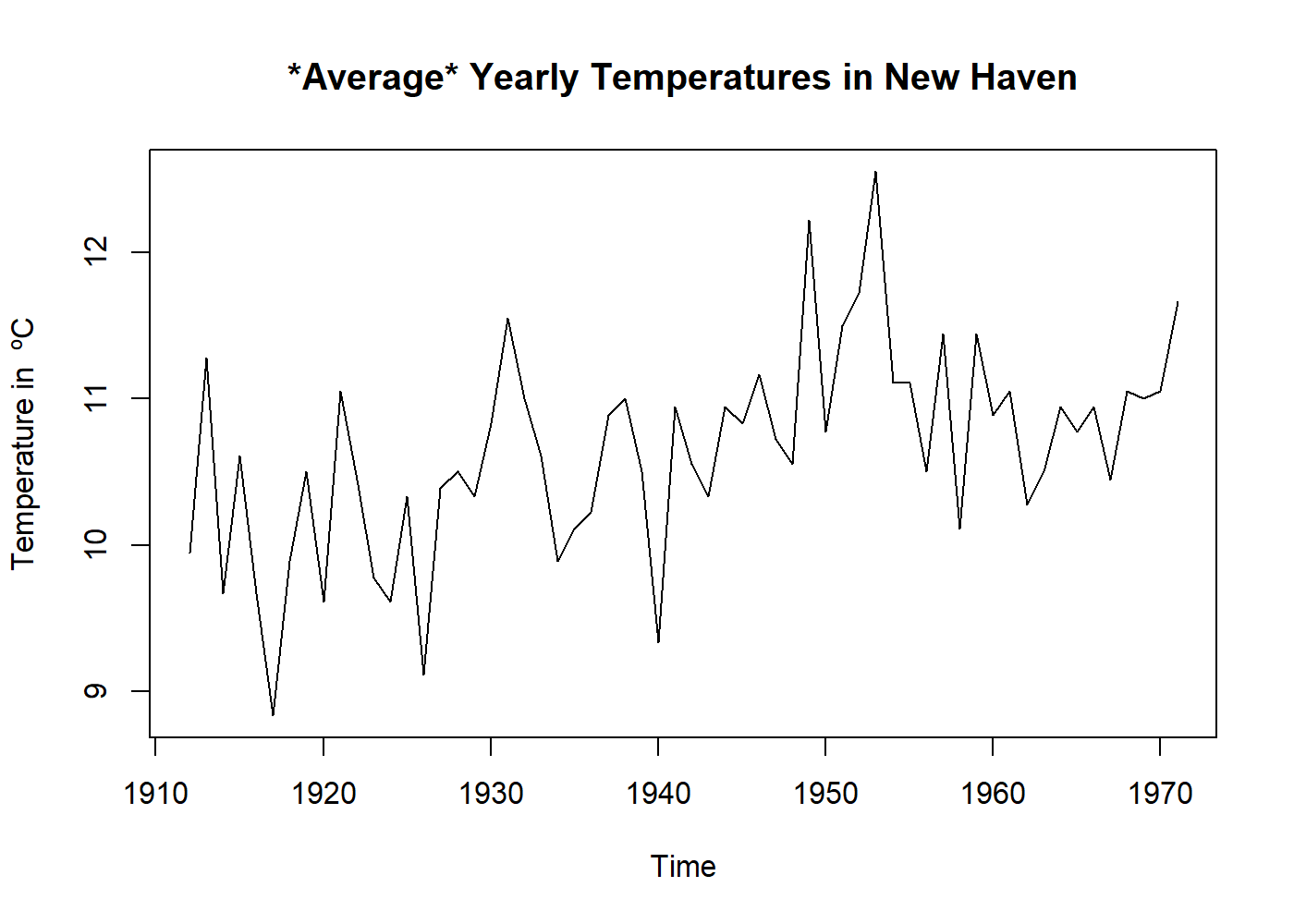

plot(((nhtemp) - 32) * 5 / 9, main = "*Average* Yearly Temperatures in New Haven", ylab = "Temperature in ºC")

- Marketing: sales per month;

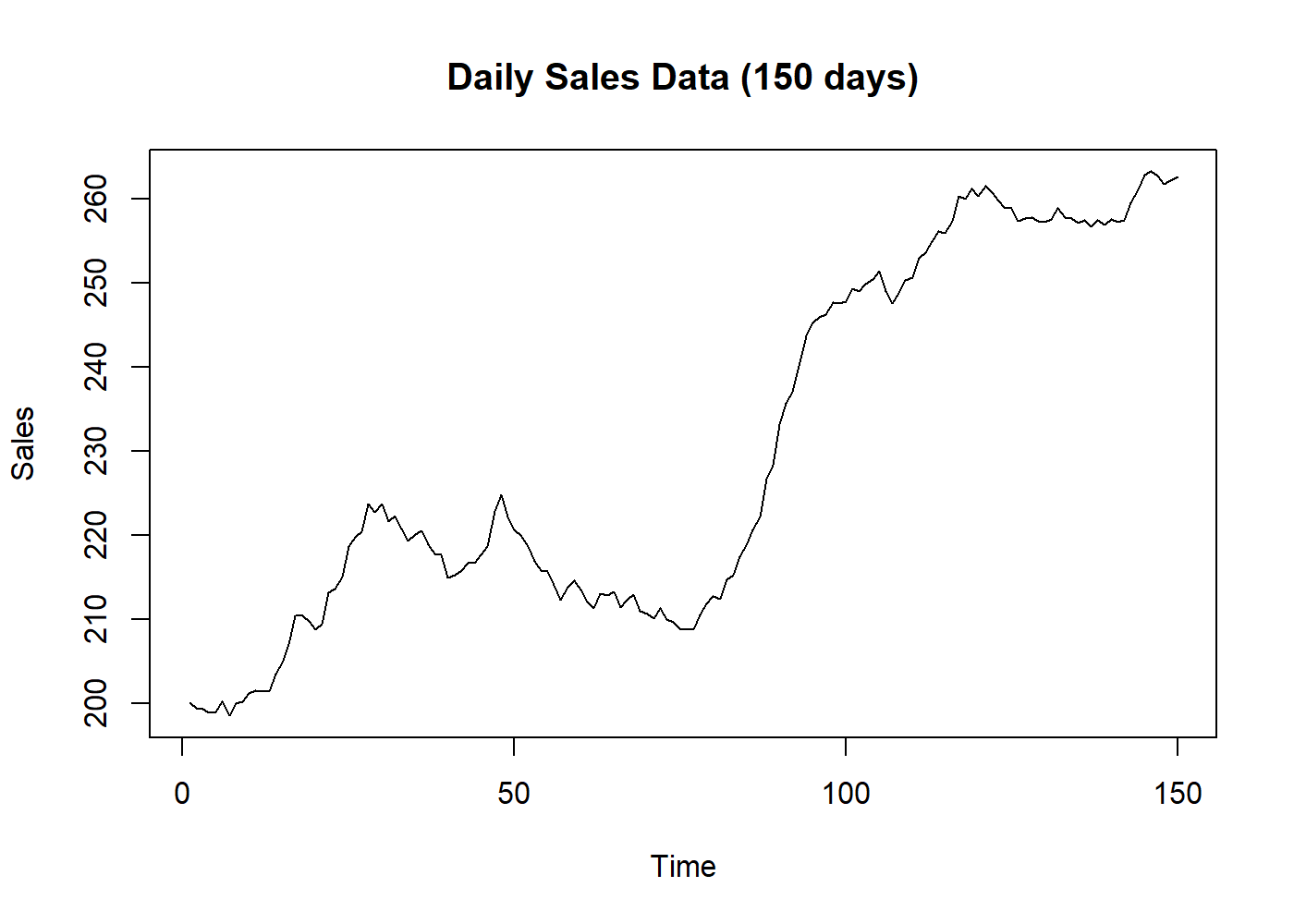

plot(BJsales, main = "Daily Sales Data (150 days)", ylab = "Sales")

- Demographics: population per year, car accidents per day;

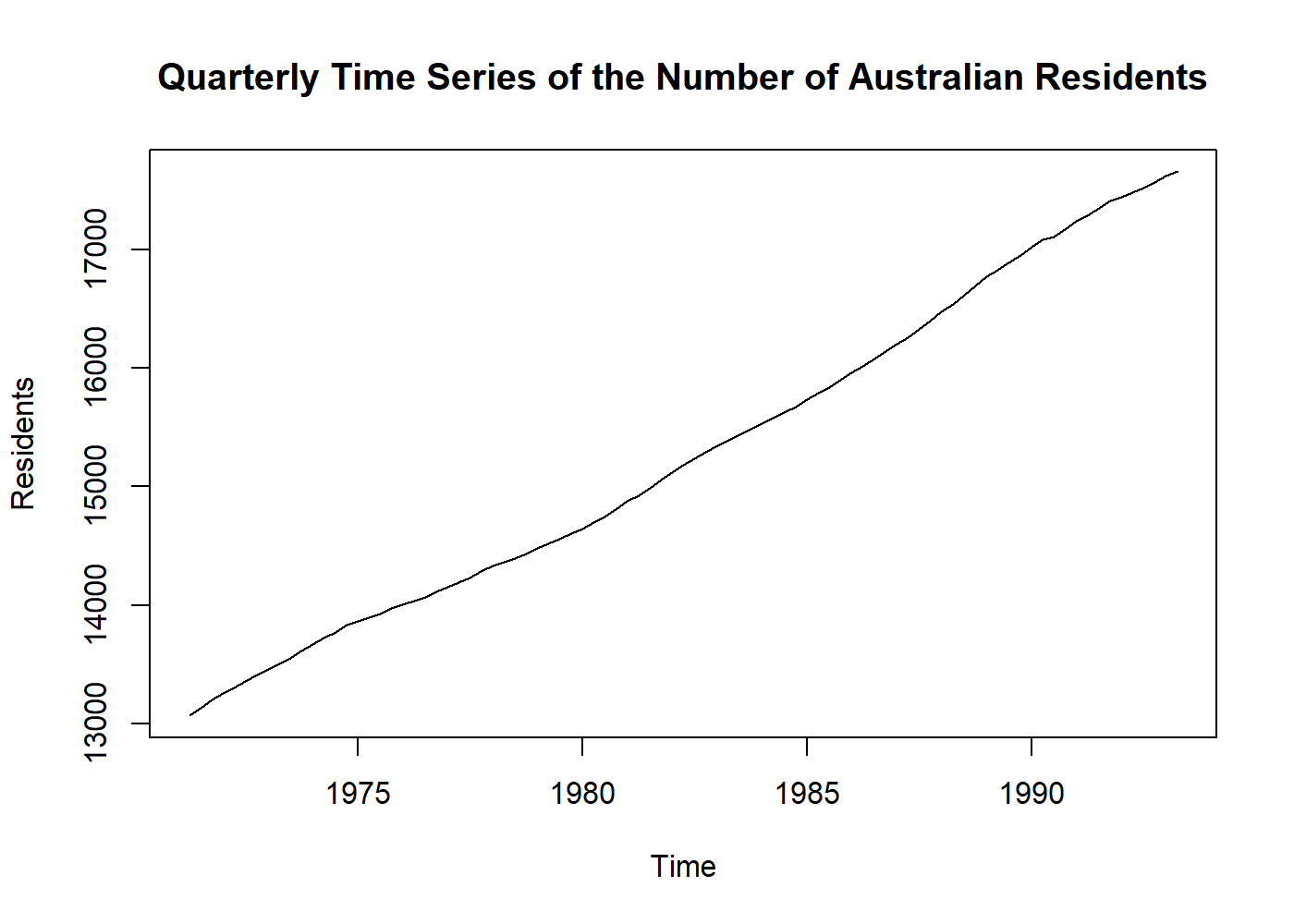

plot(austres, main = "Quarterly Time Series of the Number of Australian Residents", ylab = "Residents")

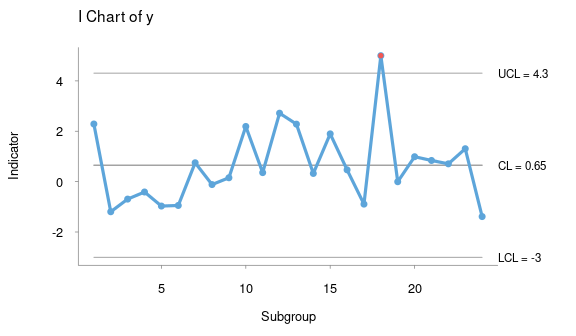

- Process control: factory measurements like final can weights, quality scores;

knitr::include_graphics("control_chart.png") # here I borrowed an image, sorry.

image from package

qichartsMeasured at irregular time intervals (events), examples:

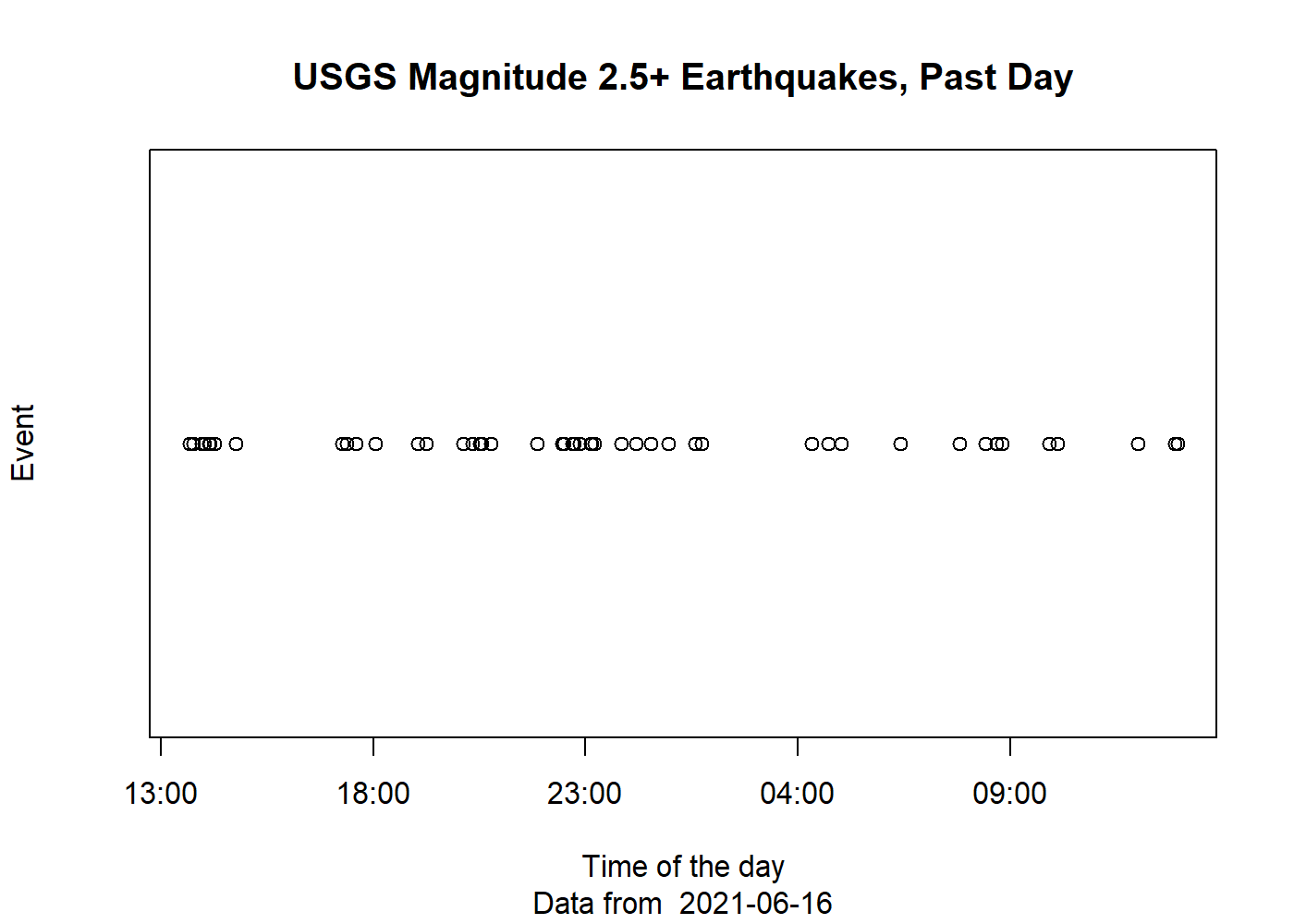

- Point processes: earthquakes (events)

eq_data <- readr::read_csv("https://earthquake.usgs.gov/earthquakes/feed/v1.0/summary/2.5_day.csv") eq_data$yes <- 0 plot(eq_data$time, eq_data$yes, main = "USGS Magnitude 2.5+ Earthquakes, Past Day", ylab = "Event", xlab = "Time of the day", yaxt = 'n', sub = paste("Data from ", Sys.Date()))

Data downloaded from usgs.gov

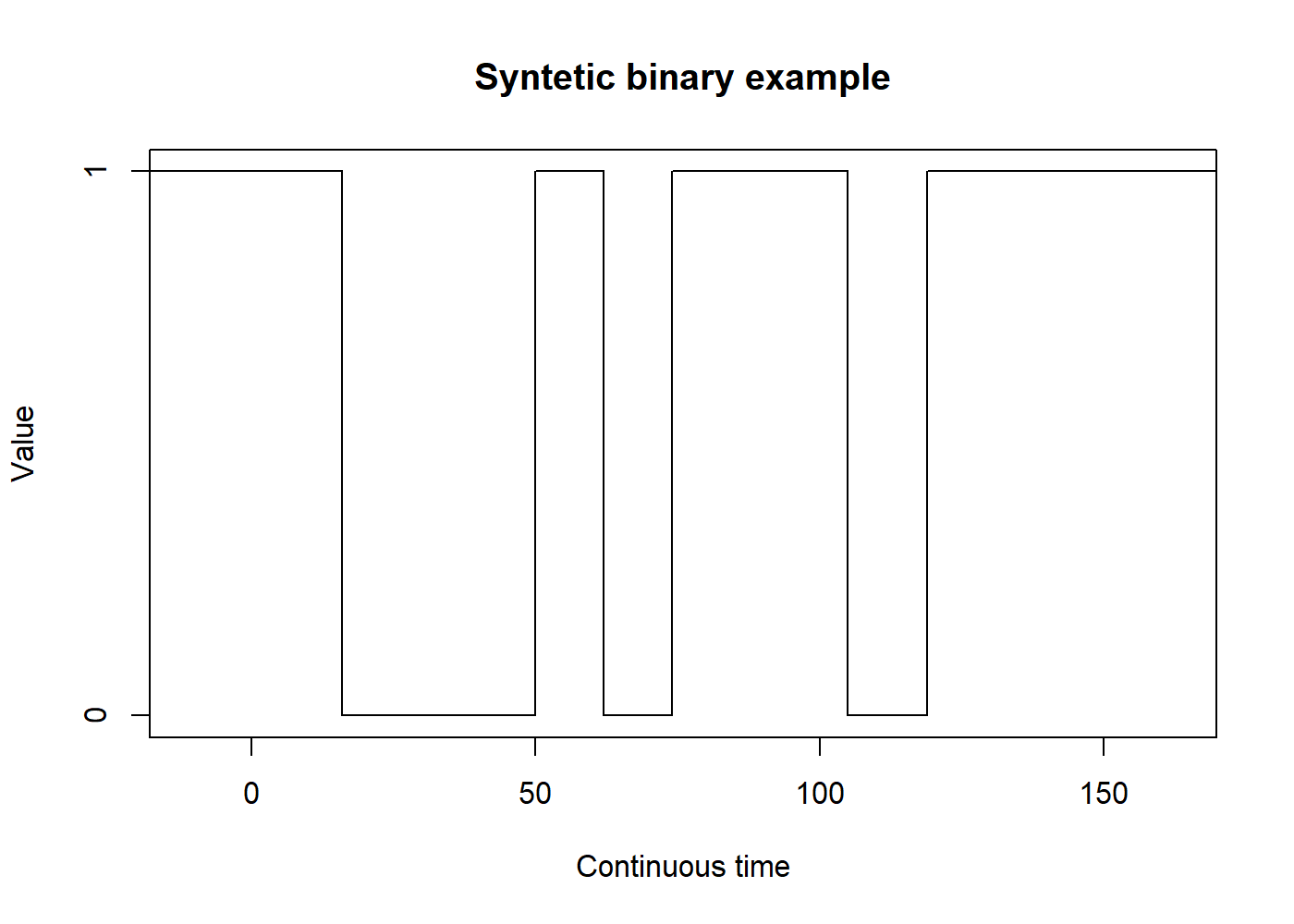

Measured continuously, examples:

- Binary processes: communication theory (turn-on/turn-off, zeros and ones);

set.seed(2114) binary_data <- unlist(replicate(10, rep(runif(1) < 0.5, floor(runif(1, 10, 20))))) binary_data <- stepfun(seq_len(length(binary_data) - 1), binary_data) plot(binary_data, main = "Syntetic binary example", ylim = c(0,1), ylab = "Value", xlab = "Continuous time", do.points = FALSE, yaxp = c(0, 1, 1))

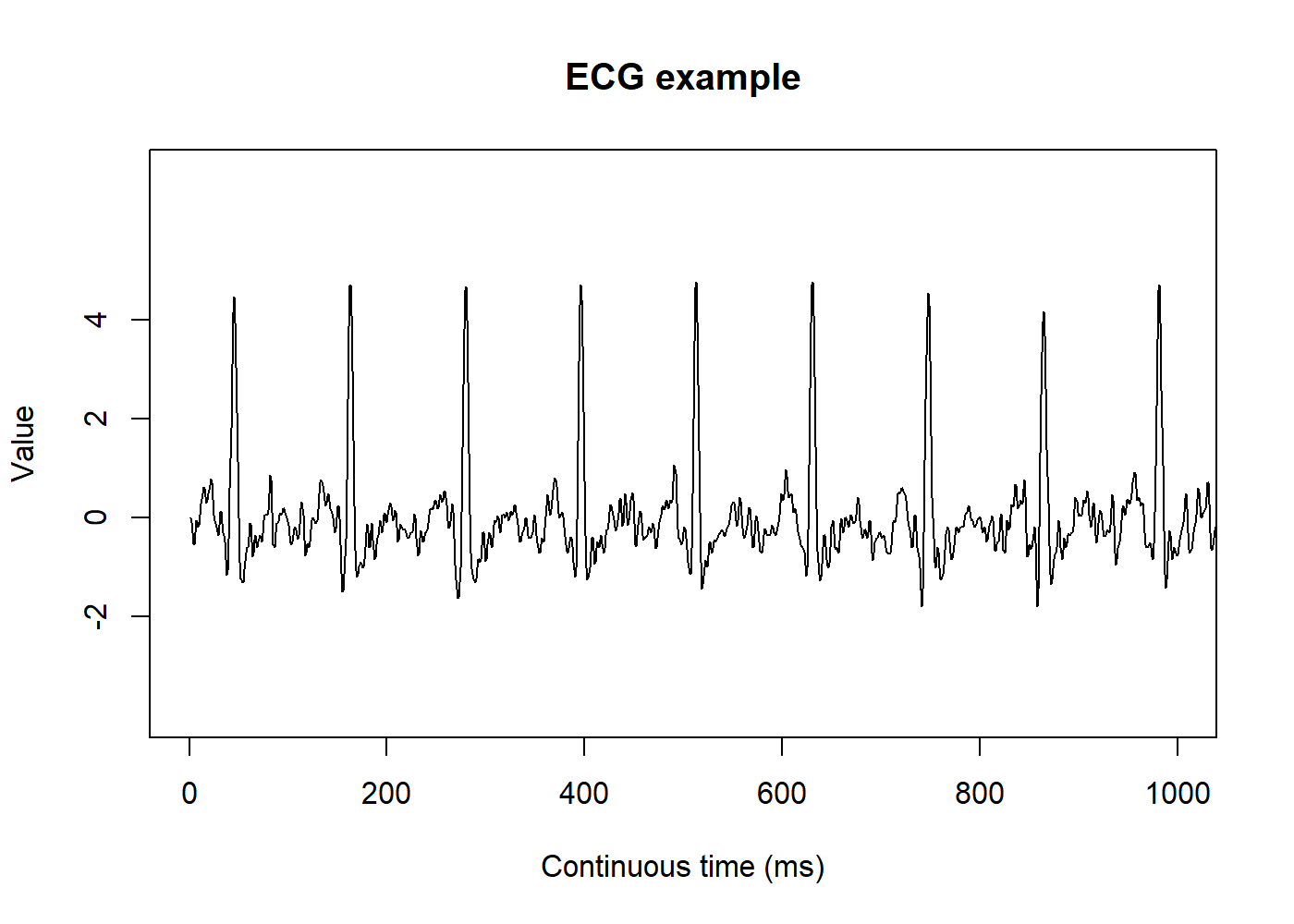

- Analog signals: sound, temperature, humidity, ECG1.

ecg_example <- readr::read_csv("ecg_example.csv") # this data you can find on physionet plot.ts(ecg_example, main = "ECG example", ylim = c(-4, 7), yaxp = c(-2, 4, 3), ylab = "Value", xlab = "Continuous time (ms)", xlim = c(0, 1000))

Data from Physionet, record

a103l

2 The goals of Time Series Analysis

2.1 Description

Like any kind of data analysis, the first step is to know the data. It is imperative to plot a time series before trying to analyze it. This simple step will show us any noticeable trend or seasonal variation and allow us to spot outliers2 or some turning point in the data, which may require the use of more than one mode to fit each part of the data.

2.2 Explanation

When we have multiple variables collected simultaneously, it may be possible to find some correlation between them. The variation of one time series may explain the variation in another time series. Multiple regression models may be helpful here. We can also convert an input series into an output series by a linear operation and try to understand the relationship between both series.

2.3 Prediction

Using the previously available data, we may want to predict the future values of that series. Historically, the terms “prediction” and “forecasting” may be used interchangeably or not. Thus it is essential to pay attention to how the literature is referring to both terms. Sometimes “prediction” may refer to subjective methods or the procedure to achieve the “forecasting” (the objective method or the actual future values). There is a close relationship between prediction and control problems where manufacturing processes that are going to move off-target can be proactively corrected.

2.4 Control

When the time series is originated from measures of “quality” of a manufacturing process, the objective of the analysis is to control this process. There are specific methods to do such control, and this topic is outside the scope of this article. Further information is available on specific literature called Statistical Quality Control[3].

3 Before Modeling Time Series

3.1 Transformations

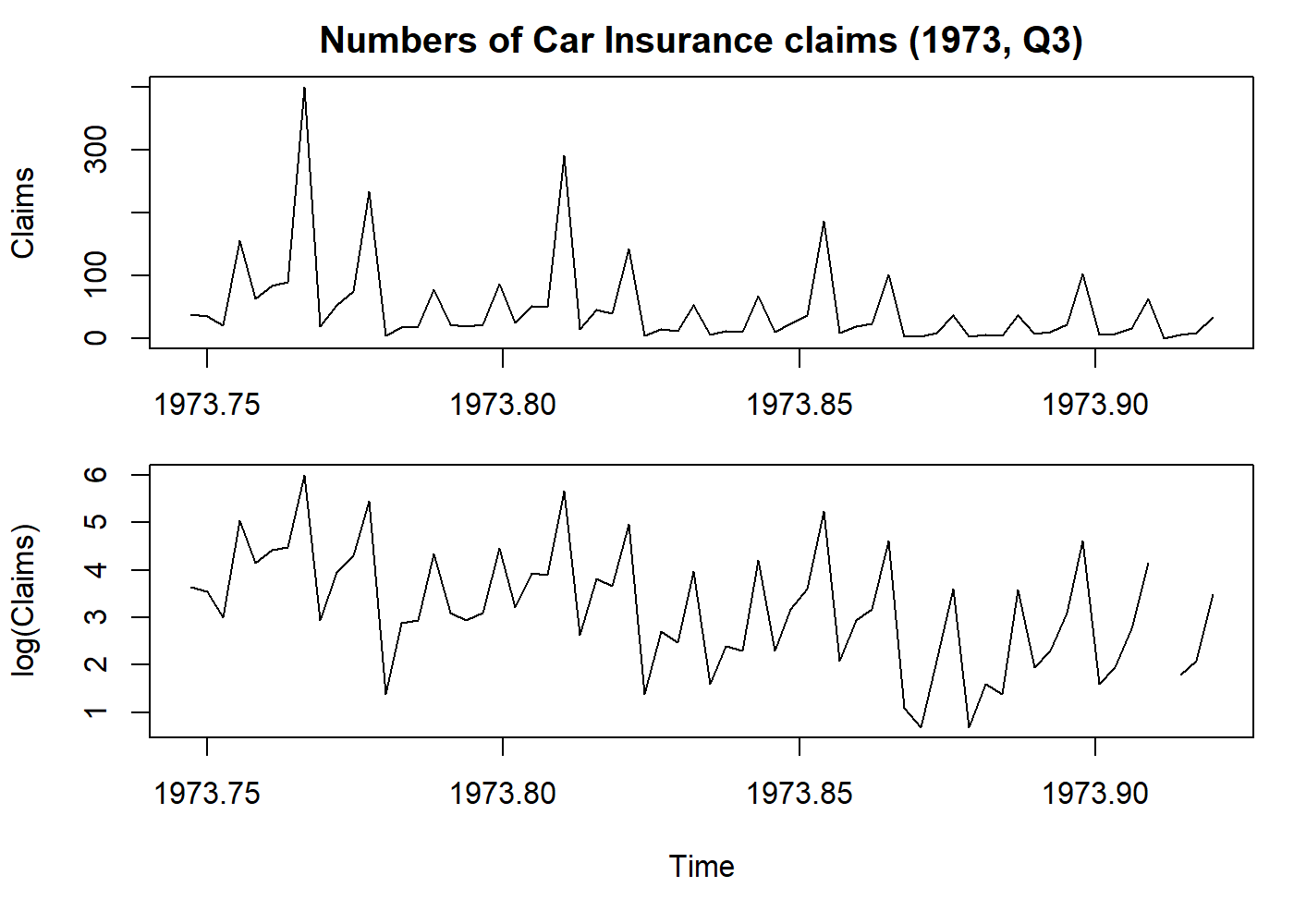

- Stabilize variance: a logarithmic transformation may reduce the variance;

# I'll explain this line once: this configures the output to hold two plots.

# And we store the old config. The last line is when we restore the config.

oldpar <- par(mfrow = c(2, 1), mar = c(3.1, 4.1, 2.1, 1.1))

# Get only the Claims column and let's transform that in a time series, with anual frequency

claims <- ts(Insurance$Claims, start = c(1973, 365.25*3/4), frequency = 365.25)

plot.ts(claims, main = "Numbers of Car Insurance claims (1973, Q3)", ylab = "Claims")

par(mar = c(5.1, 4.1, 0.1, 1.1))

plot(log(claims), ylab = "log(Claims)") # here we log-transform the data

par(oldpar)

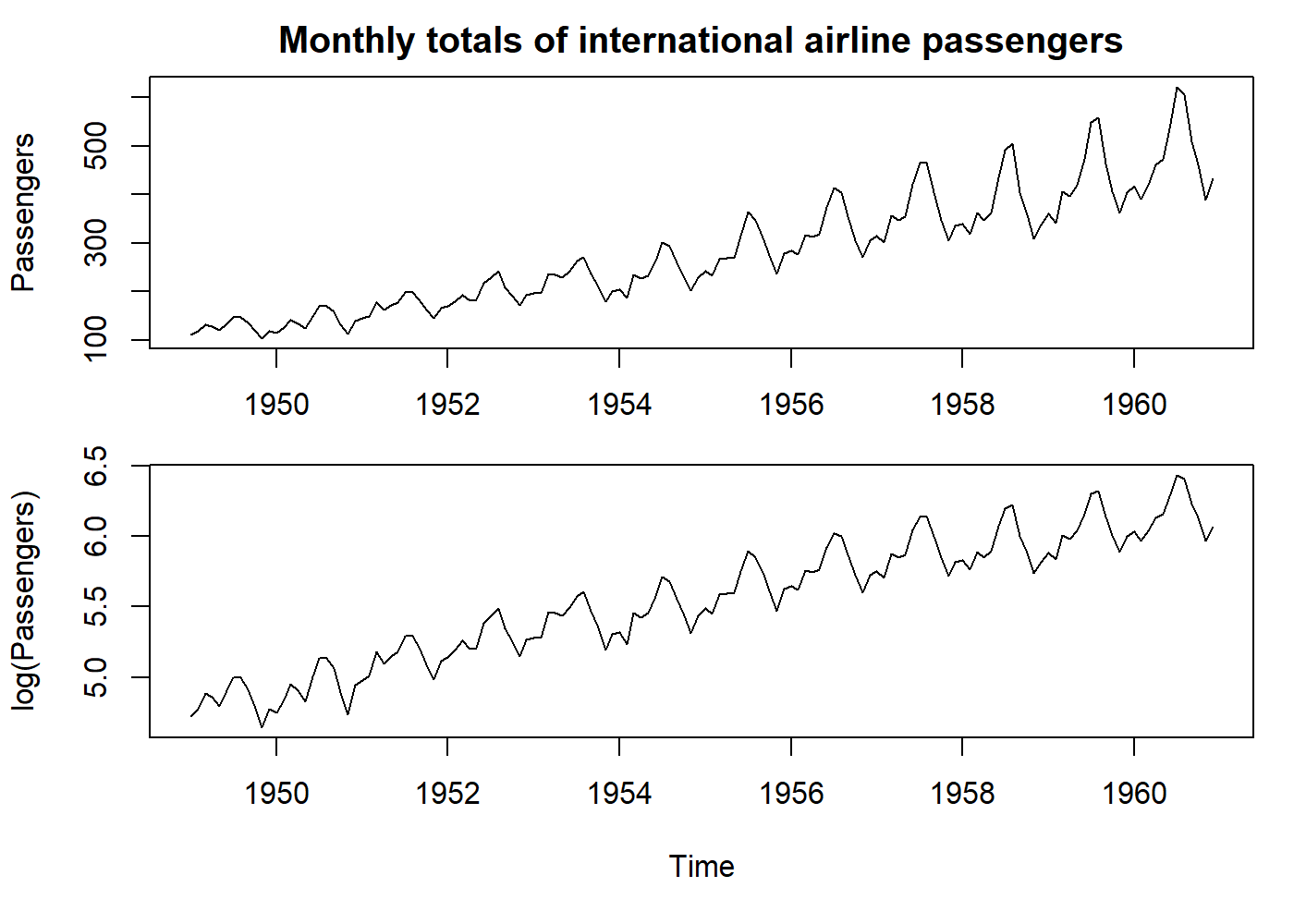

- Make seasonality additive: a logarithmic transformation transforms a multiplicative seasonality into an additive one. However, this will only stabilize the variance if the error term is also multiplicative.

oldpar <- par(mfrow = c(2, 1), mar = c(3.1, 4.1, 2.1, 1.1))

plot(AirPassengers, main = "Monthly totals of international airline passengers", ylab = "Passengers")

par(mar = c(5.1, 4.1, 0.1, 1.1))

plot(log(AirPassengers), ylab = "log(Passengers)")

par(oldpar)

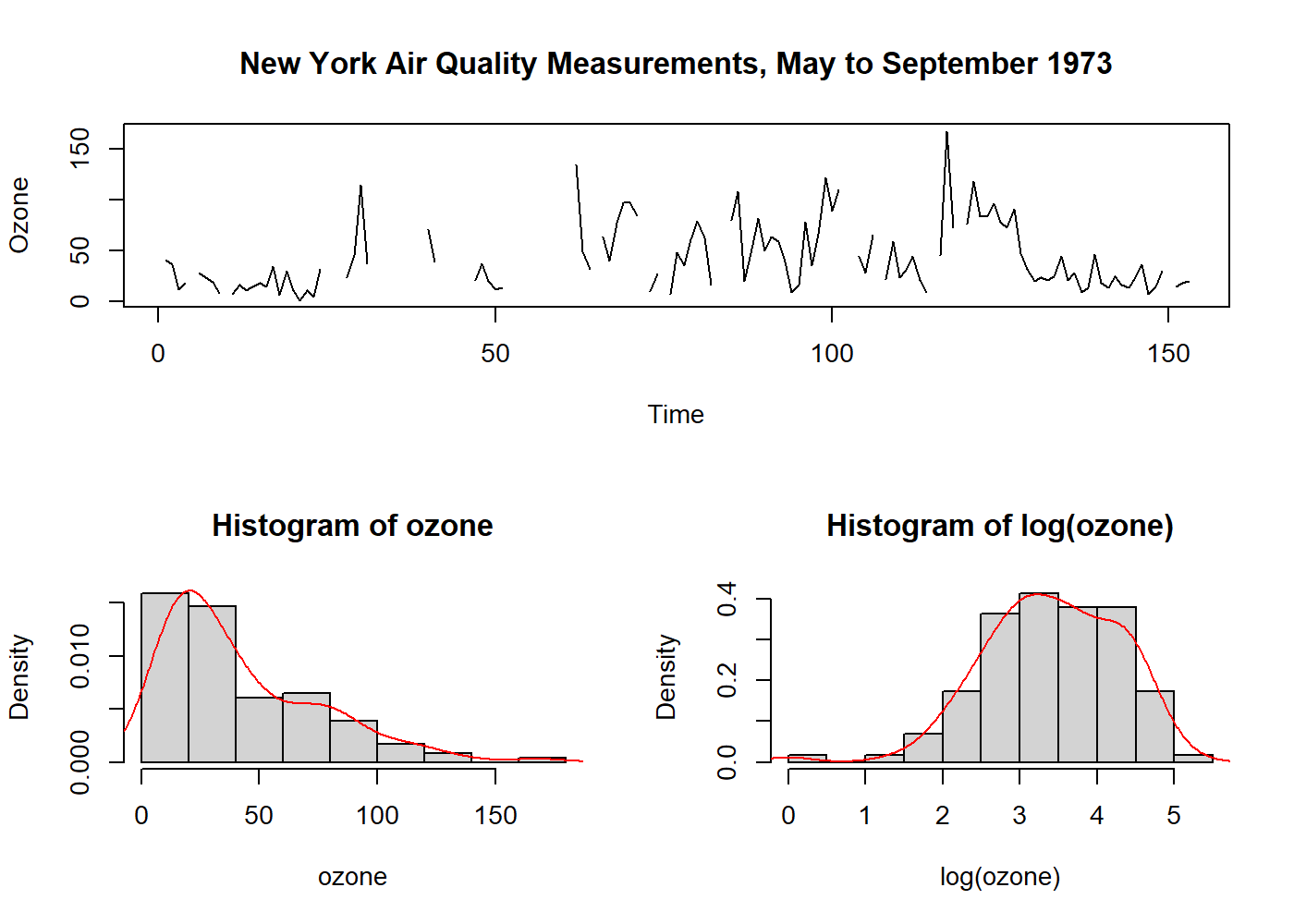

- Normalize data distribution: data is usually assumed to be normal. Logarithmic and square-root are used; however, they are just special cases of the Box-Cox transformation. The parameters may be estimated by inference, and in general, the transformation cannot overcome all requirements at the same time.

layout(mat = matrix(c(1, 1, 2, 3), ncol = 2, byrow = TRUE)) # just plot format

plot.ts(airquality$Ozone, main = "New York Air Quality Measurements, May to September 1973", ylab = "Ozone", xlab = "Time")

# Here we get the Ozone values, and remove the NA's so we can make 'statistics'

ozone <- ts(na.omit(airquality$Ozone))

hist(ozone, probability = 1); lines(density(ozone), col = "red") # data distribution

hist(log(ozone), probability = 1); lines(density(log(ozone)), col = "red") # fairly normalized

It is interesting to note that Nelson and Granger, in 1979, found little benefit in applying a general Box-Cox transformation in several datasets. Usually, it is advised to apply the least possible transformations, except when the variable has a direct physical interpretation.

3.2 Dealing with Trends

Trends in time series are difficult to define and have more than one “formal” definition in literature. Loosely we can say that trend is a “long-term change in the mean level.” The main problem is how to define “long-term” in every situation.

The practical importance of a time series with a trend depends on whether we want to measure the trend and/or remove the trend so we can analyze the higher frequency oscillations that remain.

It is important to remember that sometimes we may be more interested in the trend than what is left after removing it.

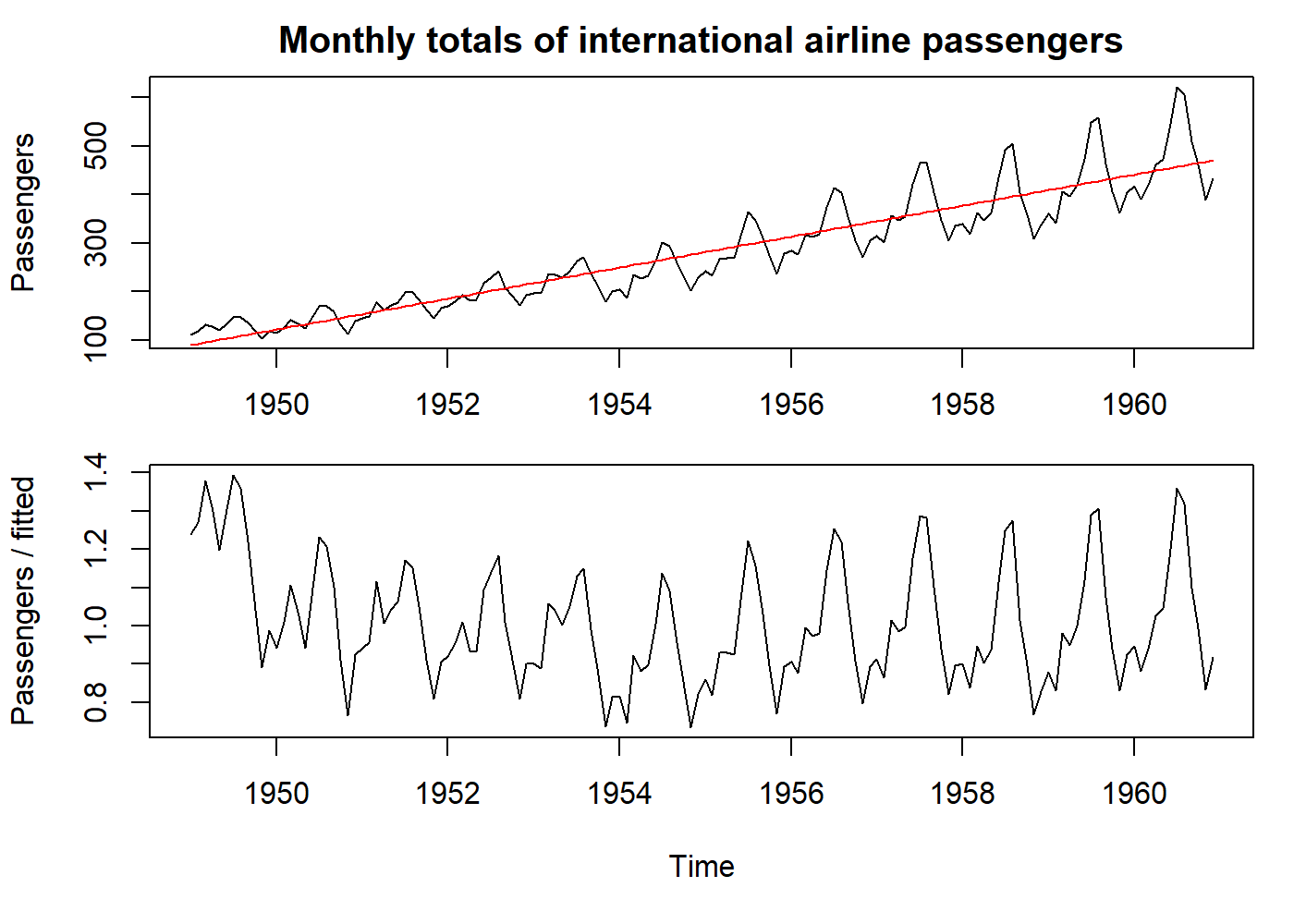

3.2.1 Curve Fitting

This technique is not more than removing the trend and analyze the “residuals.” In general, particularly for yearly data, we can use a simple polynomial curve.

oldpar <- par(mfrow = c(2, 1), mar = c(3.1, 4.1, 2.1, 1.1))

# Our old friend, AirPassengers dataset. See how it increases both the mean and the variance.

plot(AirPassengers, main = "Monthly totals of international airline passengers", ylab = "Passengers")

# Let's just fit a line on the data

fit <- lm((AirPassengers) ~ seq_along(AirPassengers))

pred <- predict(fit, data.frame(seq_along(AirPassengers)))

pred <- ts(pred, start = start(AirPassengers), frequency = frequency(AirPassengers))

# `pred` contains our line based on the simple linear model we did.

data <- AirPassengers / pred # why divide and not subtract? because the variance also increases (we have a multiplicative seasonality)

lines(pred, col = "red")

par(mar = c(5.1, 4.1, 0.1, 1.1))

plot(data, ylab = "Passengers / fitted")

par(oldpar)

3.2.2 Filtering

Filtering is a little more complex operation than curve fitting. We are not just trying to find a polynomial that best fits our data, but we are transforming our original TS into another TS using a formula (that here we call filter). This filter can be one of several kinds of “Moving Averages,” locally weighted regressions (e.g., LOESS), or “Splines” (a piecewise polynomial). One caveat of these smoothing techniques is the end-effect problem (since in one end of the time series, we do not have all the values to compute, for example, the moving average).

Simple moving average ex:

Example with splines:

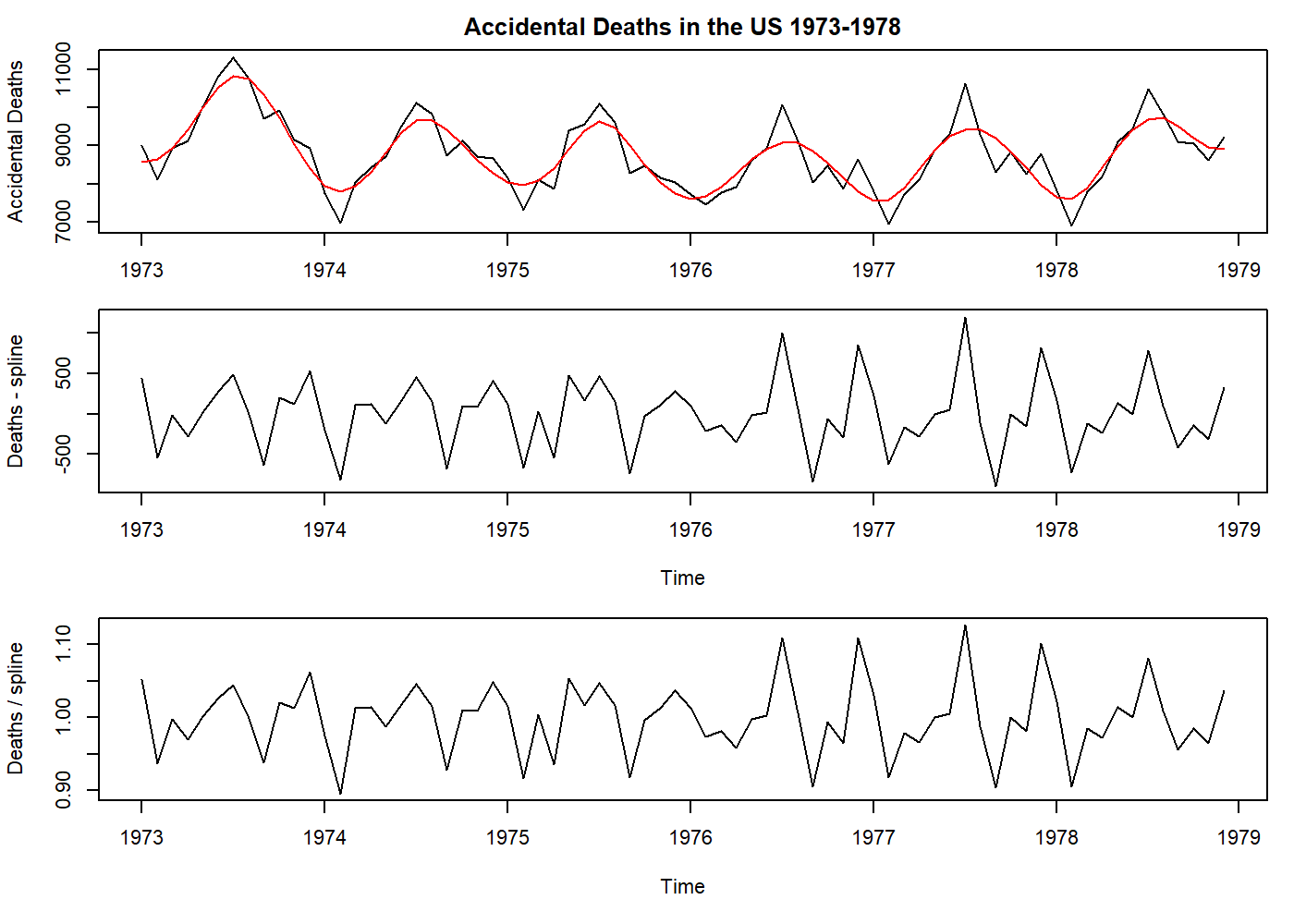

oldpar <- par(mfrow = c(3, 1), mar = c(3.1, 4.1, 2.1, 1.1))

plot(USAccDeaths, main = "Accidental Deaths in the US 1973-1978", ylab = "Accidental Deaths")

# here is another way to "fit a line". The number of knots is your choice, but an old Professor once told that 4-5 per year is sufficient.

pred <- smooth.spline(USAccDeaths, nknots = 24)$y

pred <- ts(pred, start = start(USAccDeaths), frequency = frequency(USAccDeaths))

lines(pred, col = "red")

par(mar = c(5.1, 4.1, 0.1, 1.1))

data <- USAccDeaths - pred # see how subtraction and division only affects the mean on this case.

plot(data, ylab = "Deaths - spline")

par(mar = c(5.1, 4.1, 0.1, 1.1))

data <- USAccDeaths / pred

plot(data, ylab = "Deaths / spline")

par(oldpar)

3.2.3 Differencing

It is a special kind of filtering, where we compute the difference between the current value and the next.

It is helpful to remove trends, making a TS stationary.

We can use differencing multiple times (we call “orders”), but usually, one (“first-order”) iteration is sufficient.

The mathematical operator used to denote differencing is the “nabla” (

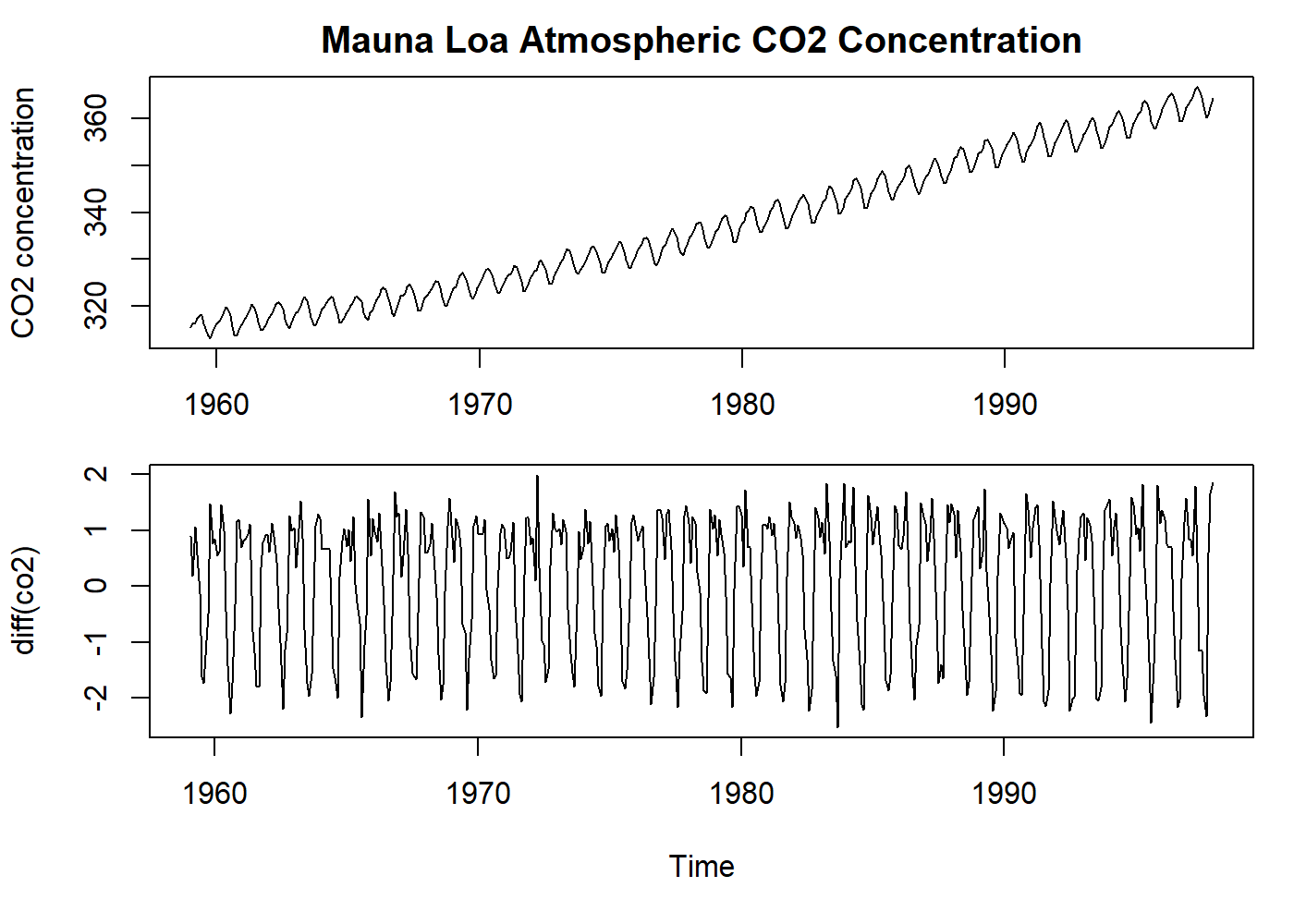

oldpar <- par(mfrow = c(2, 1), mar = c(3.1, 4.1, 2.1, 1.1))

plot(co2, main = "Mauna Loa Atmospheric CO2 Concentration", ylab = "CO2 concentration")

par(mar = c(5.1, 4.1, 0.1, 1.1))

plot(diff(co2), ylab = "diff(co2)") # the first difference removes all the trend!

par(oldpar)

See that the example above removed the trend, but kept the seasonality.

The Slutzky-Yule effect[2]: They showed that by using operations like differencing and moving average, one could induce sinusoidal variation in the data that, in fact, is not real information.

3.3 Dealing with Seasons

As for trends, the analysis of seasonal variation depends on whether we want to measure the seasonal effect and/or remove the seasonality.

For a time series with a slight trend, a straightforward estimate of the seasonal effect is to take the average of every January (for example) and subtract (in additive case) or divide by (in multiplicative case) the average of the year.

For a time series with a significant trend, a more robust approach may be taken. For monthly data, we can use:

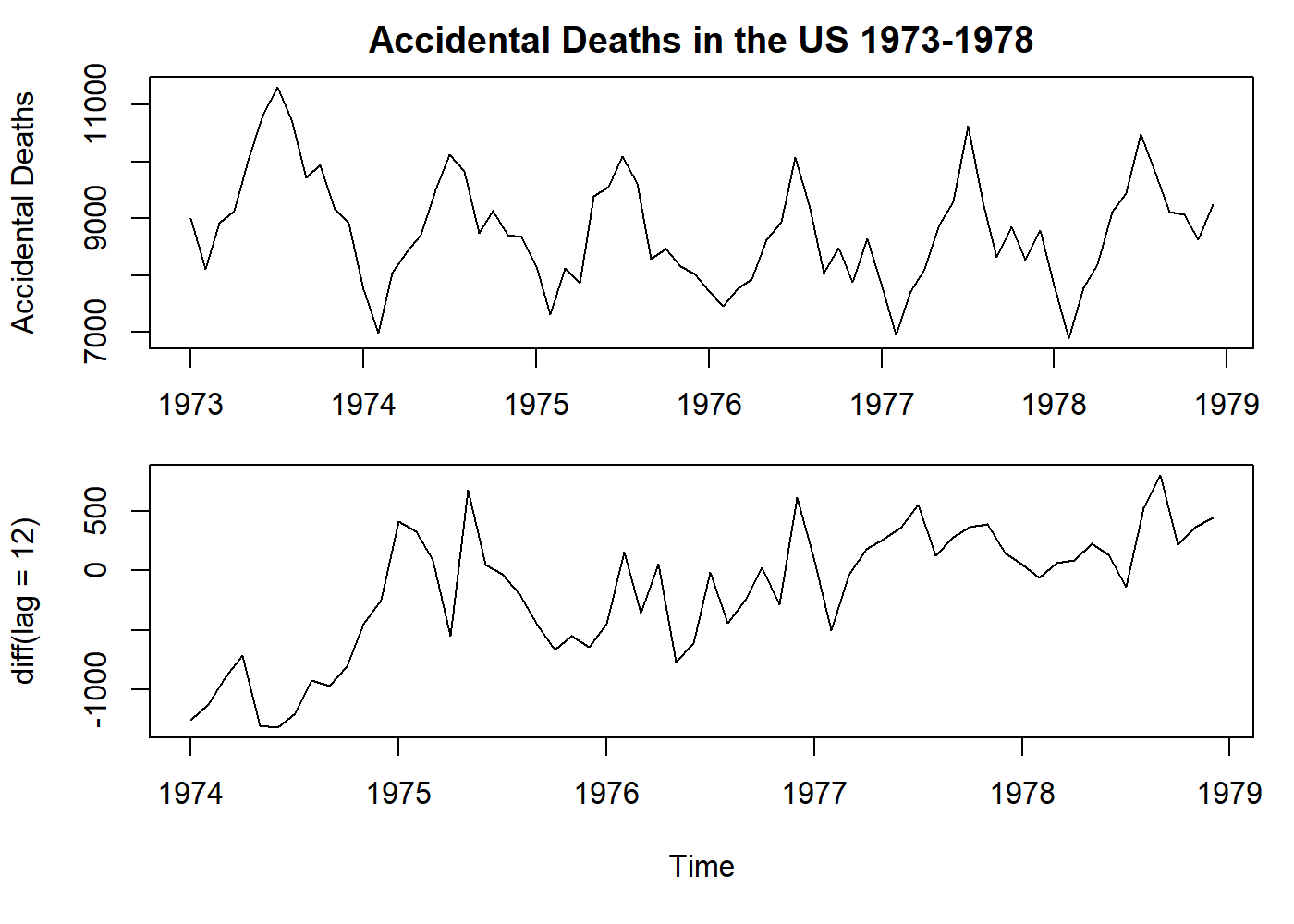

The seasonality can also be eliminated by differencing with lag.

For example, with monthly data, we can use the operator

oldpar <- par(mfrow = c(2, 1), mar = c(3.1, 4.1, 2.1, 1.1))

plot(USAccDeaths, main = "Accidental Deaths in the US 1973-1978", ylab = "Accidental Deaths")

par(mar = c(5.1, 4.1, 0.1, 1.1))

# here still a first degree differencing, but with a leap of 12 months.

# See how we remove the seasonality, but not the trend.

plot(diff(USAccDeaths, lag = 12), ylab = "diff(lag = 12)")

par(oldpar)

See that the example above removed the seasonality, but kept the trend.

3.4 Autocorrelation

It may be the fundamental property of a time series. As pointed before in section 1, time series data has the assumption of non-independence, and autocorrelation is just how we turn this property into an objective and measurable value. Autocorrelation measures the correlation between observations at different time lags. As we may observe, at time zero, the coefficient is one because the observed value totally agrees with itself, and sometimes this coefficient is skipped in some plots. The distribution of these coefficients provides us insight into the probability model that had generated this series. Later in section 4.1, we will write the definition of this property.

3.4.1 The correlogram

The graphic representation of the autocorrelation coefficients is called a correlogram, in which the coefficient

Interpretation. Here we offer some general advice:

Random series: for a large

Short-term correlation: Stationary series usually presents with a short-term correlation. What we see is a large value for

Alternating series: If the data tends to alternate sequentially around the overall mean, the correlogram will also show this behavior: the value of

Non-stationary series: If the data has a trend, the values of

Seasonal fluctuations: If the data contains seasonal fluctuations, the correlogram will display an oscillation of the same frequency.

3.4.2 Testing for randomness

There are valuable tools to test if the data is random or not. For the sake of simplicity, this subject will not be covered in this article. However, testing residuals for randomness is a different problem[1] and will be discussed later.

4 Stochastic Processes

In the real world, most processes have in their structure a random component. The term “stochastic” is a Greek word that means “pertaining to chance.” A more formal definition of a stochastic process is: “a collection of random variables which are ordered in time and defined at a set of time points which may be continuous or discrete”[1].

Most statistical problems are focused on estimating the properties of a population from a sample. In time series, we need to realize that each data point is a “sample” of the “population” at that given time. When we read a value from a sensor (for a plausible example, let us think of an arterial line that measures the blood pressure directly), we read a unique value at that time, not the distribution of values that could be possible read. This infinite set of possible values that could compose our time series is called an ensemble. The actual time series we have is one of the possibly realizations of the stochastic process.

As with other mathematical functions, a simple way to describe the stochastic process (as probability function) is using its moments. The first moment is the mean, and the second moment is the variance (and the autocovariance, for a sequence of random variables).

4.1 Stationary Processes

It is the process that is ready to model. In other words, the previous steps before modeling a time series are to make it stationary. Loosely speaking, a stationary process is a process that has a constant mean, variance, and no periodic variations.

Formally, a we can say a process is strictly stationary if the joint distribution of

are constants independently of the value of

As the autocovariance coefficient depends on the units in which

which measures the correlation between

The reasoning behind the suggestion that the distribution of

Strict stationarity is very restrictive and few processes achieve it.

4.1.1 Second-order Stationarity

A more helpful definition, for practical reasons, is a less restricted definition of stationarity where the mean is constant, and ACVF only depends on the lag. This process is called second-order stationary.

This simplified definition of stationarity will be generally as long as the properties of the processes depend only on its structure as specified by its first and second moments.

4.2 The autocorrelation function

As shown in section 3.4.1, the autocorrelation coefficients are helpful in describing a time series. The autocorrelation function (ACF) is an essential tool for assessing its properties.

Here we will describe the properties of the ACF.

Suppose a stationary stochastic process

4.2.1 Property 1

The ACF is an even function of the lag in that

This property just states that the correlation between

4.2.2 Property 2

for any constants

When

so that

so that

4.2.3 Property 3

Lack of uniqueness. A stochastic process has a unique covariance structure. However, the opposite is not valid. We can find other processes that produce the same ACF, adding another level of difficulty to the sample ACF interpretation. To overcome this problem, we have the invertibility condition that will be described later on Moving average processes.

4.3 Some useful stochastic processes

4.3.1 Purely random process

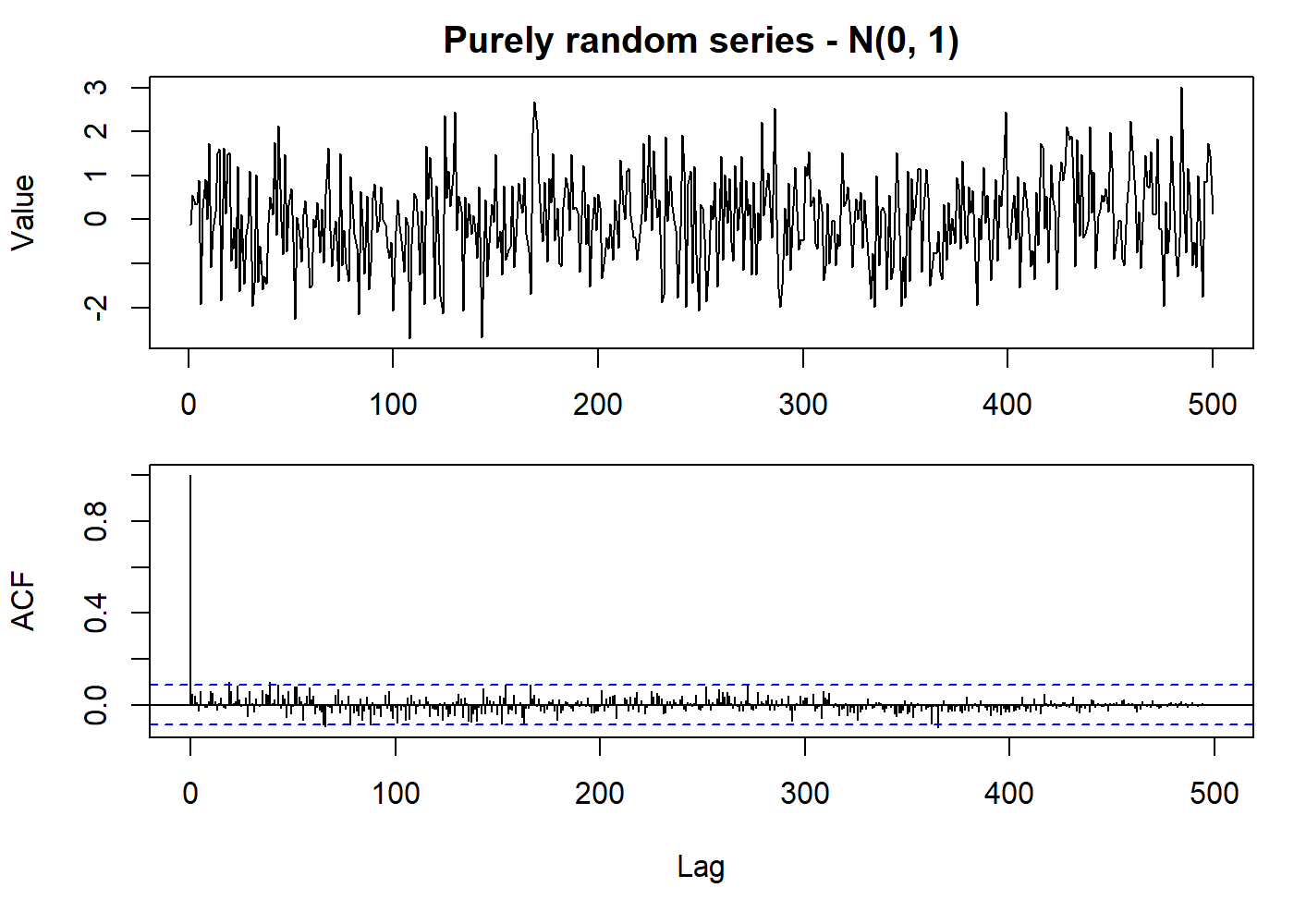

A process can be called purely random if it is composed of a sequence of random variables

Since the mean and ACVF do not depend on time, the process is second-order stationary. In fact, it also satisfies the condition for a strictly stationary process. The ACF is given by

set.seed(2021)

oldpar <- par(mfrow = c(2, 1), mar = c(3.1, 4.1, 2.1, 1.1))

normal_random <- rnorm(500)

plot.ts(normal_random, main = "Purely random series - N(0, 1)", ylab = "Value")

par(mar = c(5.1, 4.1, 0.1, 1.1))

acf(normal_random, lag.max = 1000)

par(oldpar)

In the example above, the ACF function was kept along the entire dataset for academic purposes.

Normally it is shown only the acf {stats} manual).

This type of process is of particular importance as building blocks of more complicated processes such as moving average processes.

4.3.2 Random walk

The Random Walk is a process very similar to the previous process. The difference lies in that the current observation sums the current random variable to the previous observation instead of being independent. The definition is given by

Usually, the process starts at zero for

and

Then we find that

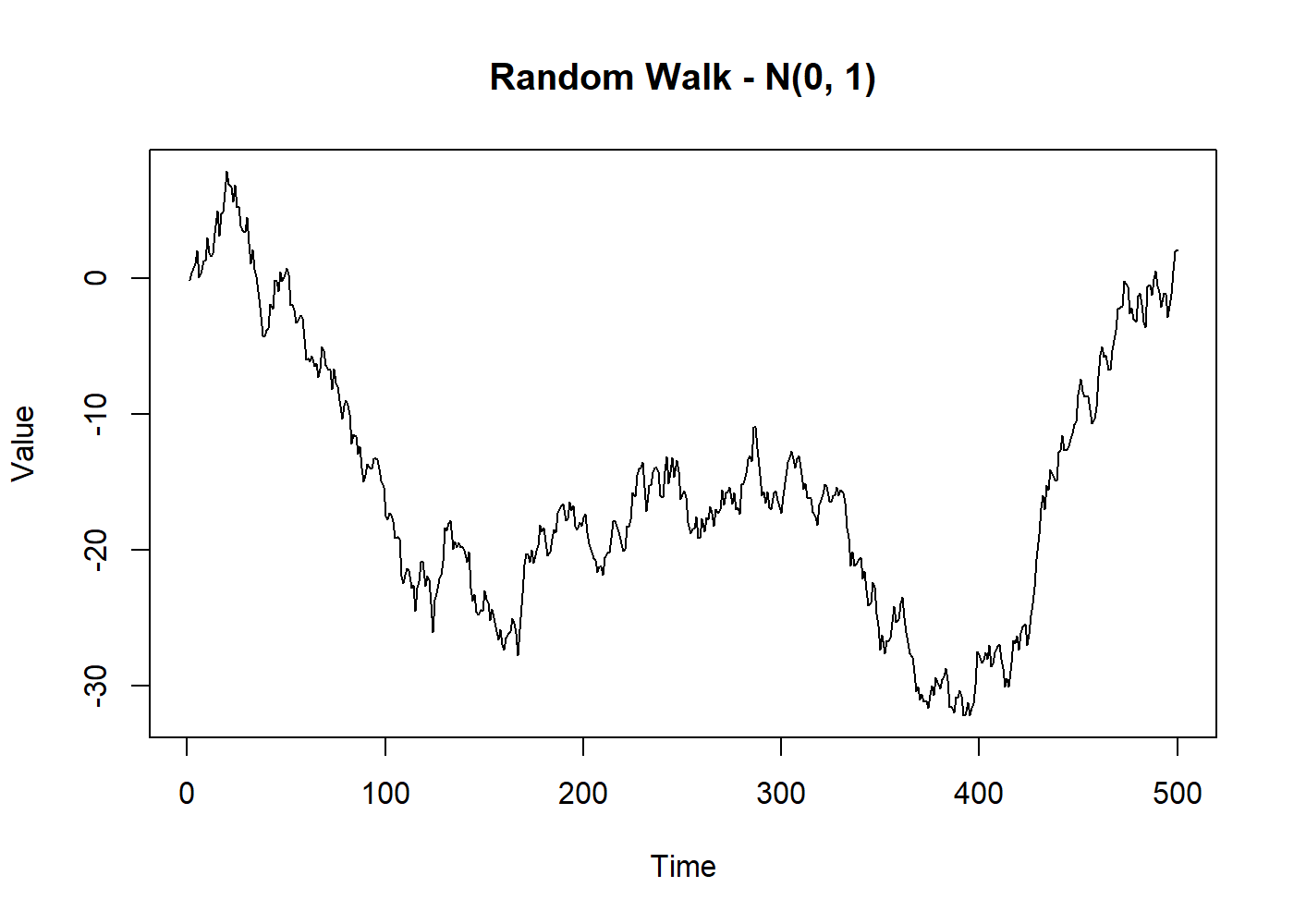

set.seed(2021)

random_walk <- cumsum(rnorm(500))

plot.ts(random_walk, main = "Random Walk - N(0, 1)", ylab = "Value")

Meanwhile, the interesting feature is that the first difference of a random walk forms a purely random process, which is therefore stationary.

set.seed(2021)

random_norm <- rnorm(500)

random_walk <- cumsum(random_norm) # same from the last plot

diff_walk <- diff(random_walk)

# below we use 2:500 because with diff, we lose the first observation

all.equal(diff_walk, random_norm[2:500])

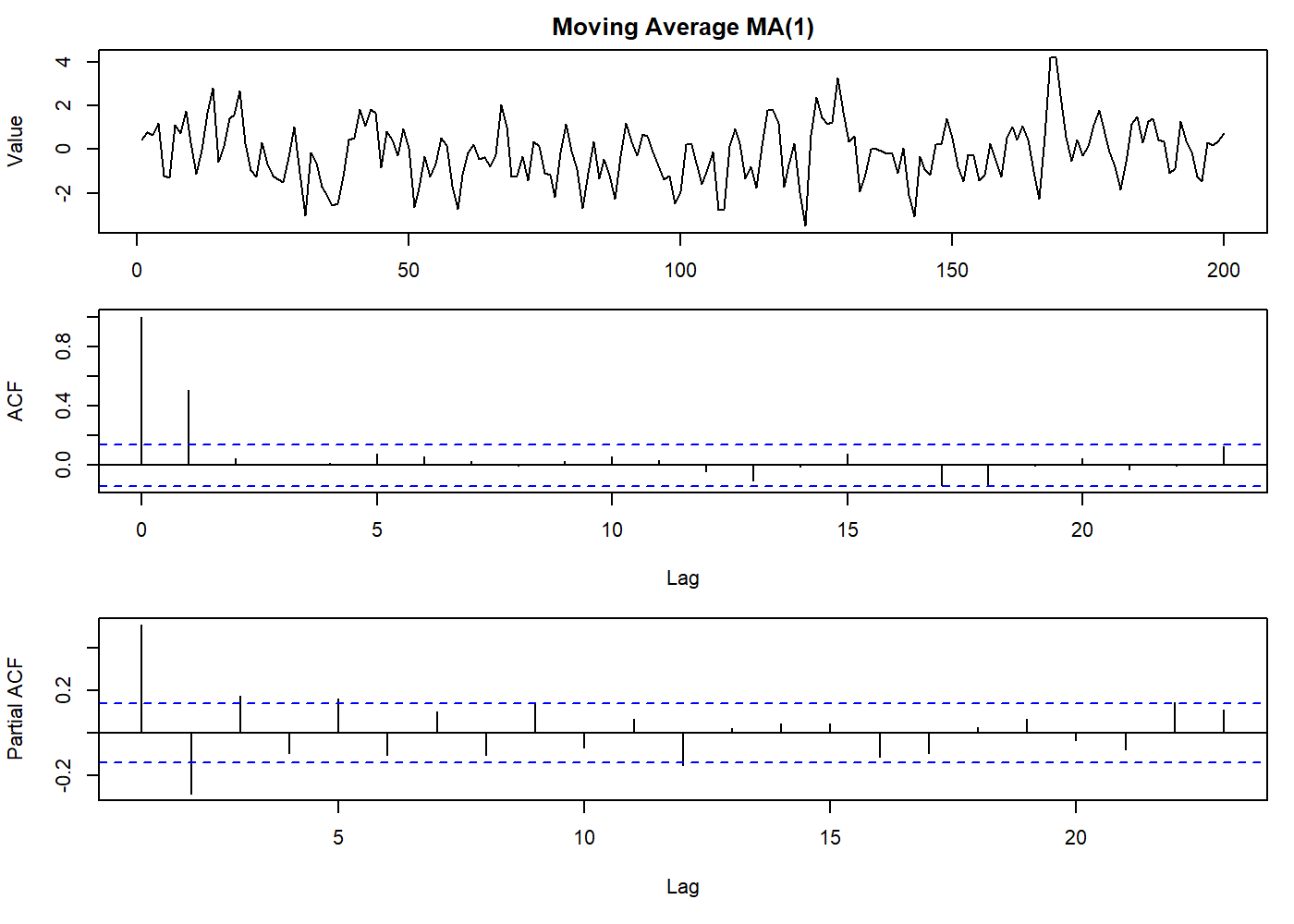

## [1] TRUE4.3.3 Moving average processes

First, not to be confused with the moving average algorithm.

The moving average process is a common approach to model a univariate time series.

The concept is that the current value

The moving average process only remembers the

Here we will say that

where

This equation is very similar to a linear regression

where the dependent process is modeled by an independent process (a purely random process). Here we omit, for simplicity, the “intercept,” that may be a constant added to the end of the right side of the equation. The “intercept” is the overall mean of this process.

Usually, the random process is scaled so that

since the

since

As

The ACF of the

Note that the ACF is “clipped” to zero at lag

oldpar <- par(mfrow = c(3, 1), mar = c(3.1, 4.1, 2.1, 1.1))

set.seed(2021)

mov_avg <- arima.sim(list(order = c(0,0,1), ma = 0.8), n = 200)

plot.ts(mov_avg, main = "Moving Average MA(1)", ylab = "Value")

par(mar = c(5.1, 4.1, 0.1, 1.1))

acf(mov_avg)

par(mar = c(5.1, 4.1, 0.1, 1.1))

pacf(mov_avg)

par(oldpar)

There is no restriction on

4.3.3.1 First-order process

The invertibility issue is shown below, where two different

We can see the problem better if we express those processes putting

In this form, if

To simplify the expression satisfying the invertibility condition, we can use the backward shift operator

Then equation (4.4.3.1) may be written as

where

For example, in the first-order case,

4.3.3.2 General-order case

We can also extend the concept to the general case of

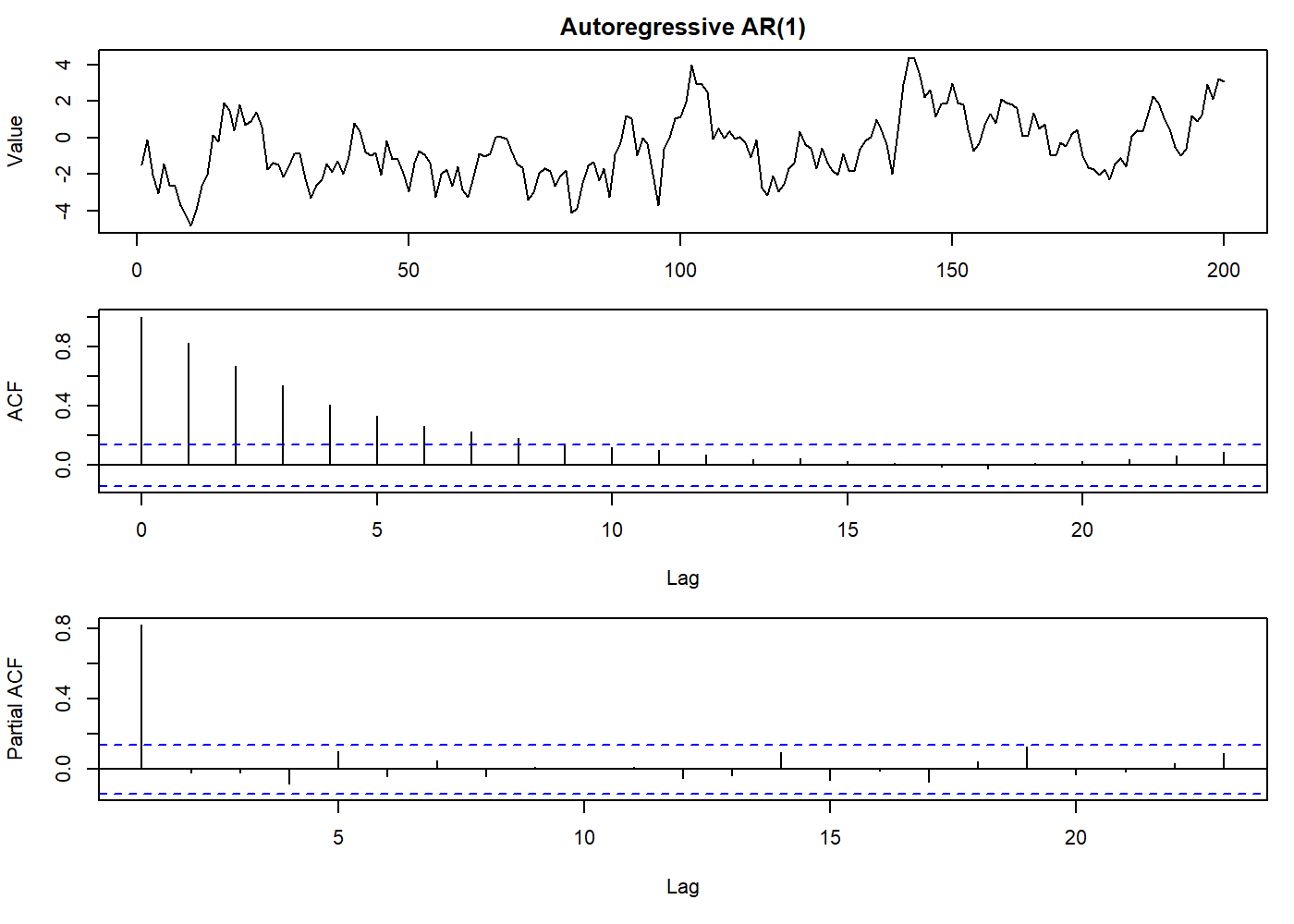

4.3.4 Autoregressive processes

In the previous section about the moving average process, we imagined the process as an experiment where you had an impulse applied to a random process with a finite time span influence.

Here we can think of an experiment where this impulse persists in time, but its influence is not visible immediately, but we see it as a repeatable pattern over and over.

This also implies that the

Again, we will say that

In contrast with the

4.3.4.1 First-order process

For a better understanding, we will analyze the first-order case, for

The

and eventually, we find that

This duality between

so that5

Comparing with the previous solution for moving average process, we see that

The variance is finite if we assume that

The ACVF is given by

For

The ACF may also be obtained more simply by assuming a priori that the process is stationary, in which case

assuming that

Now

The above method of obtaining the ACF commonly used, over the assumption that the time series is stationary.

oldpar <- par(mfrow = c(3, 1), mar = c(3.1, 4.1, 2.1, 1.1))

set.seed(2021)

auto_reg <- arima.sim(list(order = c(1,0,0), ar = 0.8), n = 200)

plot.ts(auto_reg, main = "Autoregressive AR(1)", ylab = "Value")

par(mar = c(5.1, 4.1, 0.1, 1.1))

acf(auto_reg)

par(mar = c(5.1, 4.1, 0.1, 1.1))

pacf(auto_reg)

par(oldpar)

4.3.4.2 General-order case

In the general-order case, the same property of the first-order case stands true: an

or

where

The relationship between the

We can simply state that if

Yule-Walker equations

We can, in principle, find the ACF of the general-order

These equations composes the group of equations called the Yule-Walker equations named after G. Yule and G. Walker. Which has the general form

where

The constants

Stationarity conditions

From the general form of

We can also say that if the roots of the following equation lie outside the unit circle the process is stationary (Box and Jenkins, 1970, Section 3.2)

Of particular interest is the

Thus if

from which the stationarity region is the triangular region satisfying

The roots are real if

When the roots are real, the constants

From the first of the Yule-Walker equations, we have

Thus

Hence we find

The

This does not affect the ACF.

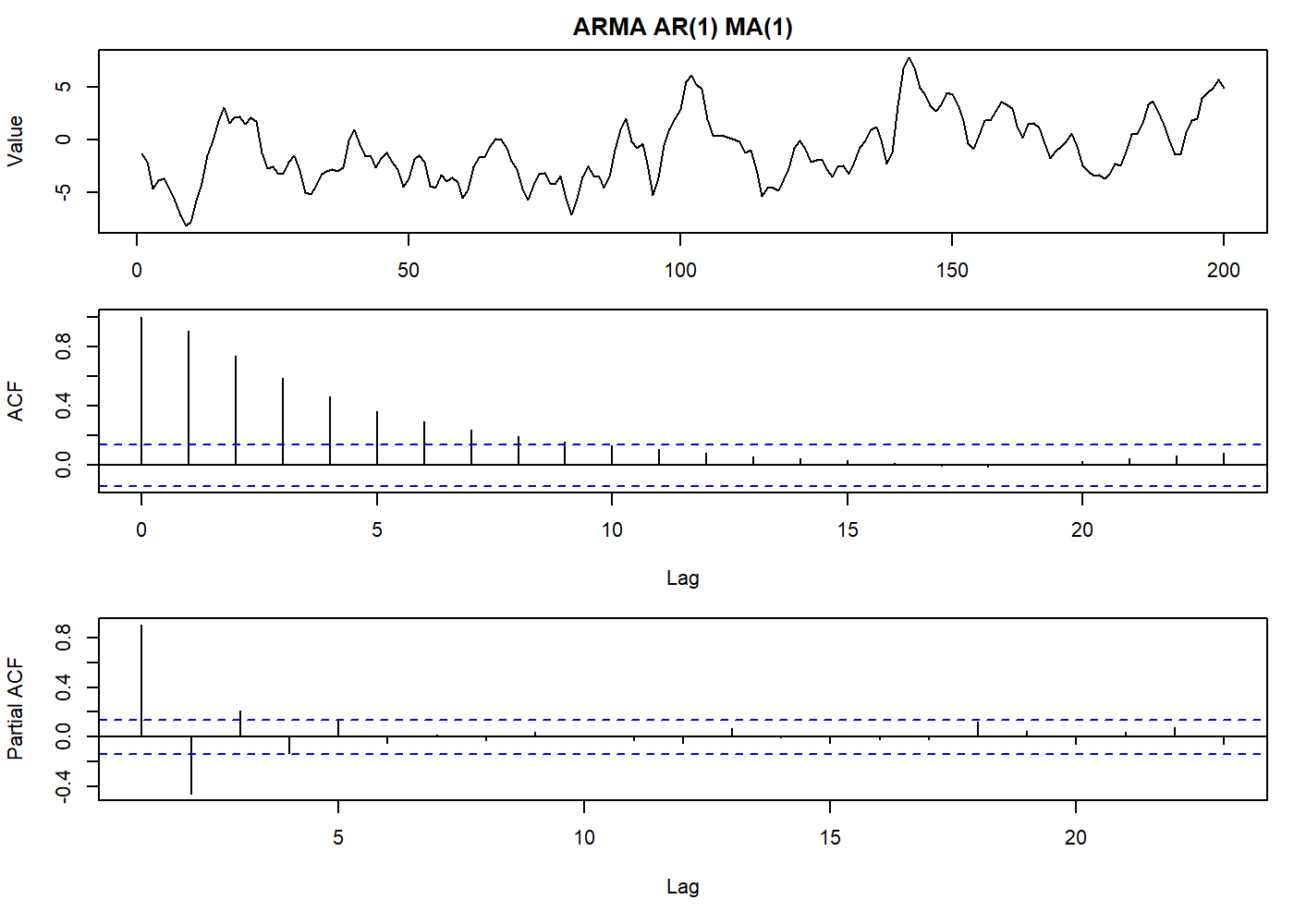

4.3.5 Mixed

Using the previous knowledge of

Using the backward shift operator

where

and

4.3.5.1 Stationarity and invertibility conditions

The values of

lie outside the unit circle.

While the values of

lie outside the unit circle.

In this article we will not explain how to compute the ACF for an

But in short, for the

set.seed(2021)

oldpar <- par(mfrow = c(3, 1), mar = c(3.1, 4.1, 2.1, 1.1))

stoch_arma <- arima.sim(list(order = c(1,0,1), ma = 0.8, ar = 0.8), n = 200)

plot.ts(stoch_arma, main = "ARMA AR(1) MA(1)", ylab = "Value")

par(mar = c(5.1, 4.1, 0.1, 1.1))

acf(stoch_arma)

par(mar = c(5.1, 4.1, 0.1, 1.1))

pacf(stoch_arma)

par(oldpar)

And the general case, we have

Our primary objective here is to see how we can describe a stationary time series using an

4.3.5.2 The

It is clearer to express an

Or a pure

where

Moving around the functions we can see lots of equities:

By convention we write

4.3.6 Integrated

In real life, most of the time series we have are non-stationary. This means we have to first remove this source of variation before working with the models we have seen until now, or, we use another composition that already takes in account the non-stationarity. As suggested in section 3.2.3, we can difference the time series to turn it stationary.

Formally we replace

Here we define the

the general autoregressive integrated moving average process (abbreviated

By analogy with equation (4.3.5.1a), we may write equation (4.3.6.1) in the form

or

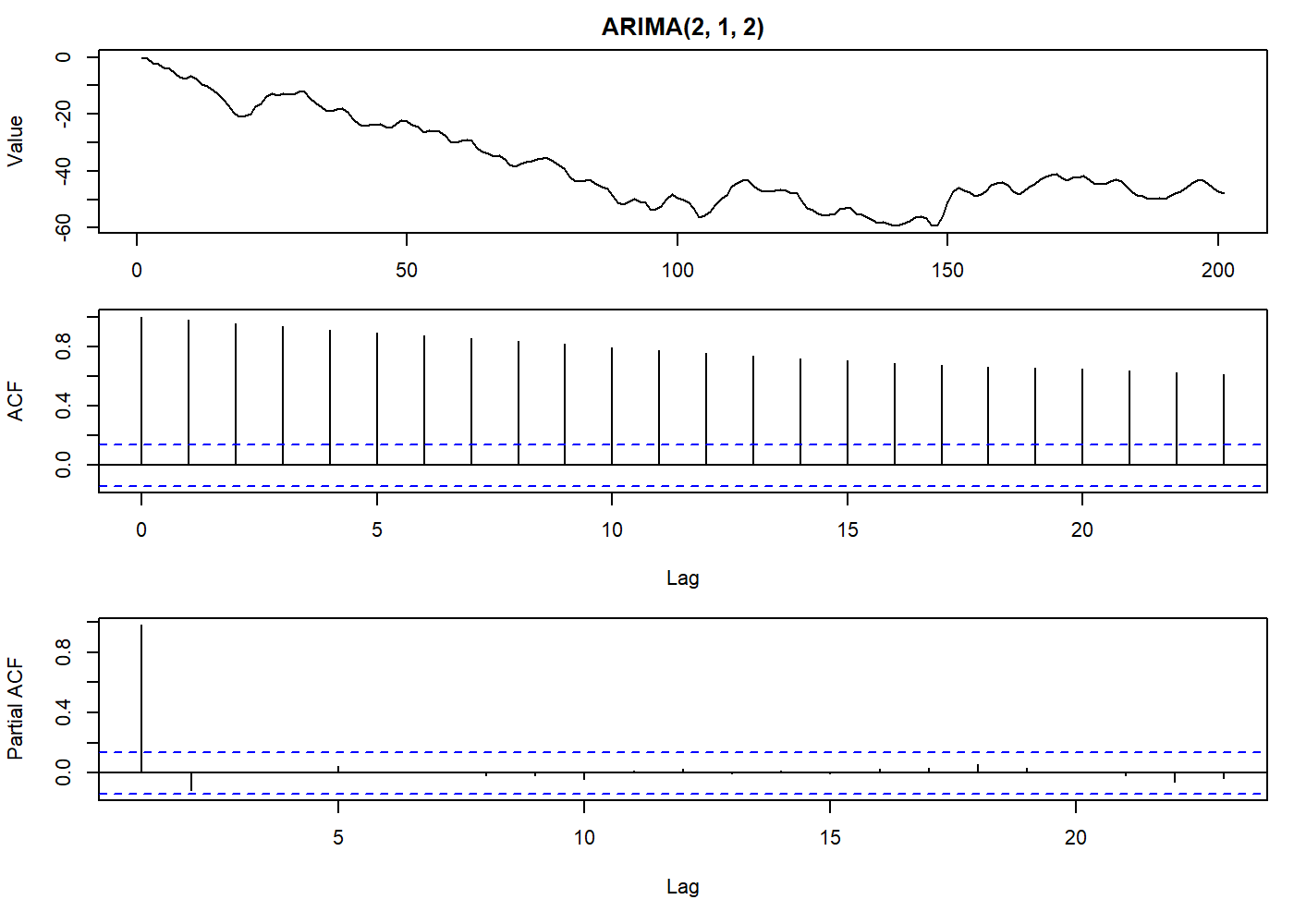

oldpar <- par(mfrow = c(3, 1), mar = c(3.1, 4.1, 2.1, 1.1))

set.seed(2021)

stoch_arma <- arima.sim(list(order = c(2,1,2), ma = c(-0.2279, 0.2488), ar = c(0.8897, -0.4858)), n = 200)

plot.ts(stoch_arma, main = "ARIMA(2, 1, 2)", ylab = "Value")

par(mar = c(5.1, 4.1, 0.1, 1.1))

acf(stoch_arma)

par(mar = c(5.1, 4.1, 0.1, 1.1))

pacf(stoch_arma)

par(oldpar)

Thus we have an

Glossary

Expected value or expectation

Backwards shift operator:

Period of time, lag

Transformation parameter or some other constant

Mean

Variance

Correlogram coefficient at lag index ‘k’

Difference operator

Autocovariance of a period of time:

Autocorrelation of a period of time:

References

Usually, analog signals are “digitized” by reading the value at discrete intervals. The pitfalls of this approach are beyond this article.↩︎

Treatment of outliers is another field of research and out of the scope of this article. An outlier may be a perfectly valid but extreme value, or a sensor that has gone entirely wild, or even a strange observation that may or not repeat for some reason. So common sense is as important as the theory.↩︎

For those looking for more trouble: the moving average process, despite what was said about the moving average algorithm, is, in fact, a (weighted) moving average of a random process! Try this at home: simulate a

In the R language, we often see the inverse roots when we plot an ARIMA model. In this case, we will see the roots inside the unit circle when the process is invertible.↩︎

Remember the power series where